At GoLead, I work as a Solution Architect, designing and implementing AI-driven learning solutions for the EdTech and L&D sectors. I initially joined as an AI Engineer, but my role quickly expanded into solution architecture and AI consulting, where I now bridge technical strategy with product innovation.

Our product, GoPrep (formerly GoPlus, before a rebrand), is an innovative platform focused on enhancing the learning experience in higher education through cutting-edge technologies like Machine Learning and Generative AI. It supports educators and learners with adaptive assessments, custom content authoring, and data-driven insights that help optimize educational experiences.

In this Project Log I will explain:

- What our product is, and what our AI does (User Journey)

- How we analyze the data we have collected (Case Study)

- How I built the AI service (Solution Architecture)

So let's talk a little about the platform before going into depth on my experience and contributions...

What is GoPrep? Who is Zacky?

GoPrep is GoLEAD’s AI‑powered courseware platform. It helps instructors design courses, assess learning, and act on analytics, while students get personalized study plans and an engaging learning experience. To explain how we made that possible, I'll break down the main features of the product and show how our AI, Zacky, helps stakeholders at every step, which is the core of my work on the Generative AI side...

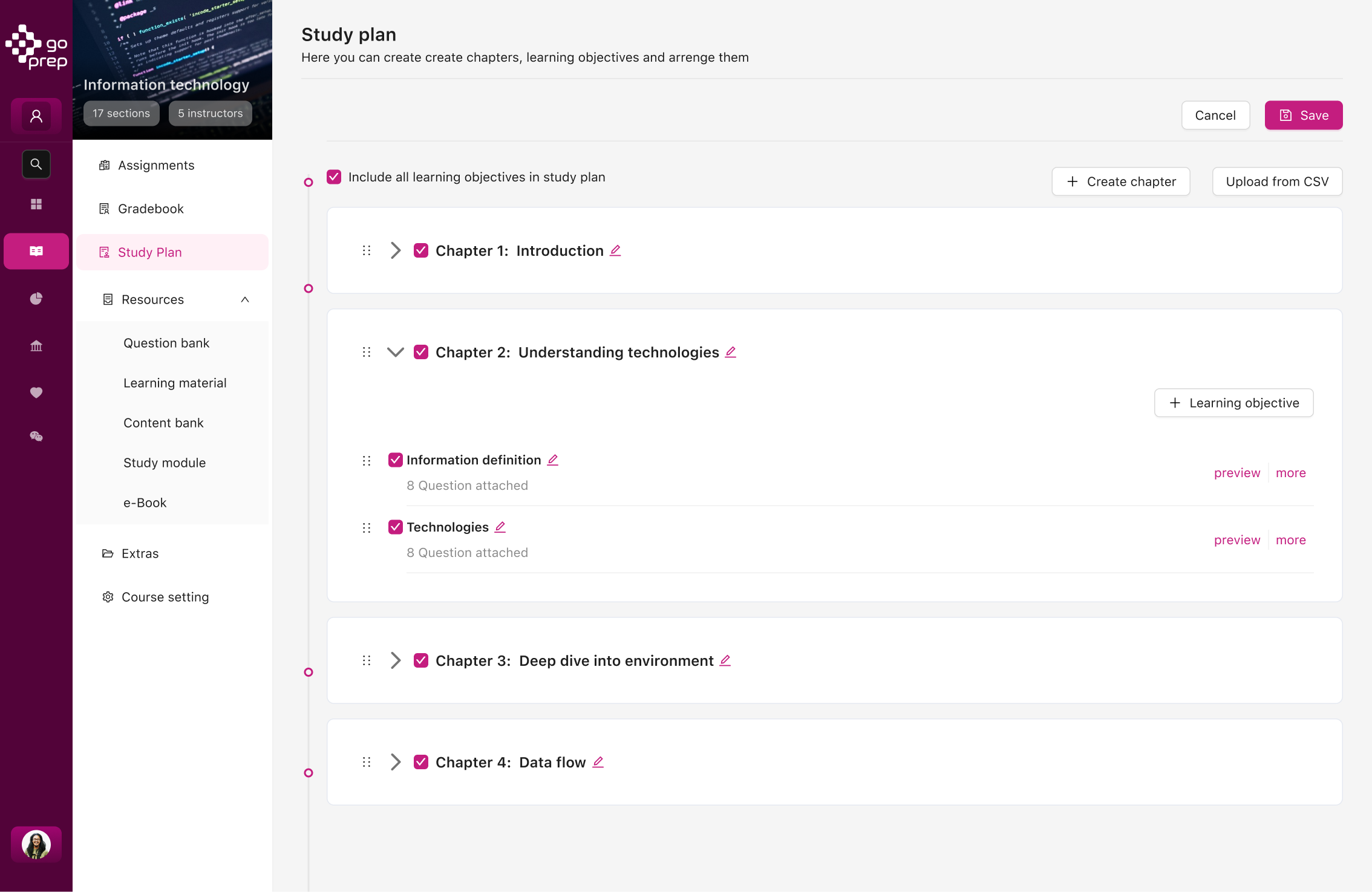

Study Plan & Learning

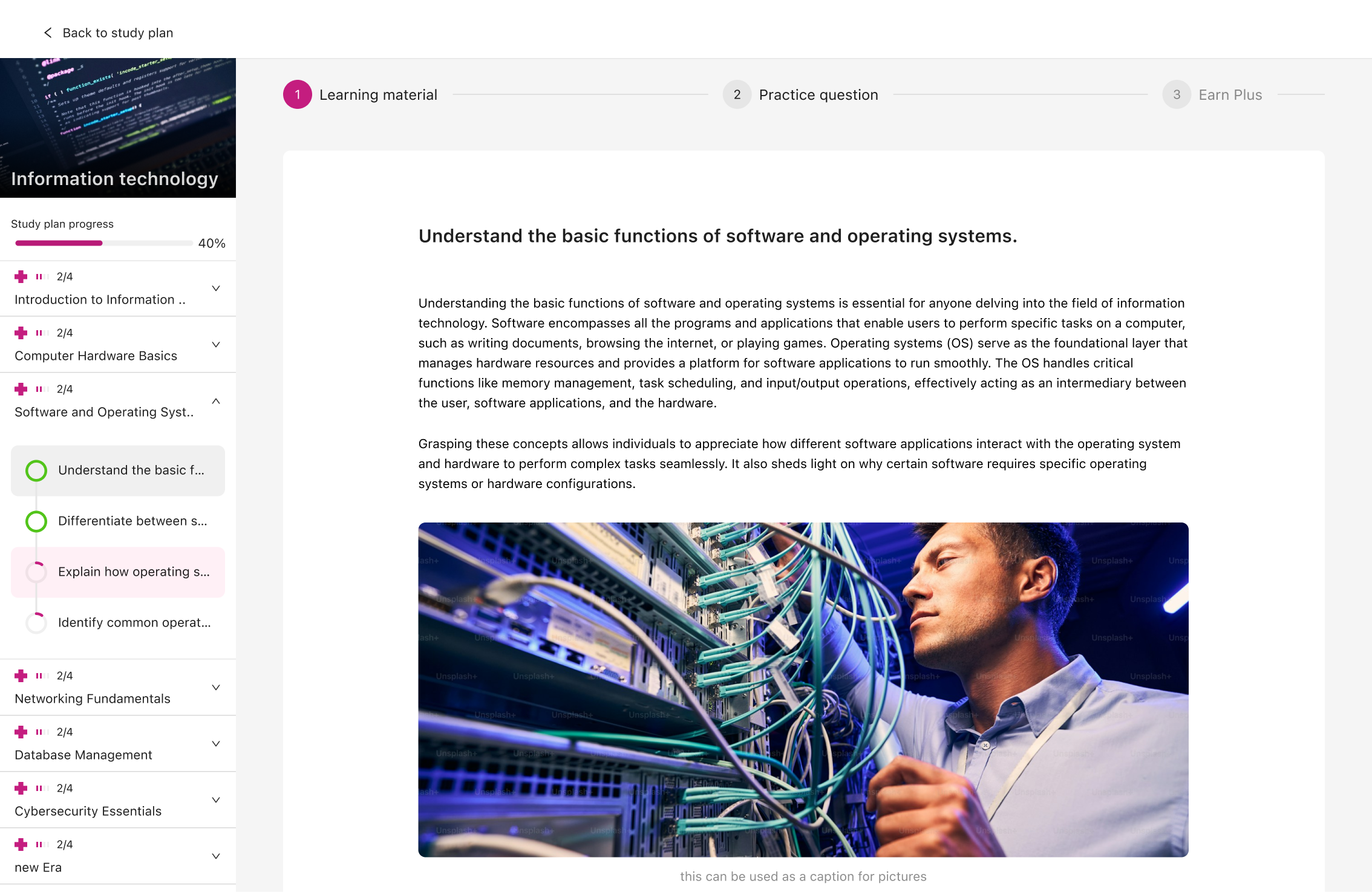

GoPrep’s Study Plan is where a course takes shape and the materials become learnable. Instructors organize chapters and learning objectives, then compose materials in a Notion‑style block editor where they can include text, images, video, voice‑overs, and embedded tools like calculators and spreadsheets. This flexible learning module keeps the plan and the content together in one place.

On the student side, each objective follows the same flow every time: first learn, then practice, then a quick EarnPlus (our gamified check, where students must earn a set number of points before moving forward) to self-evaluate. It’s responsive across devices, and we plan to support live teaching too, with Jam Sessions that let you present the exact materials you authored in an interactive class setting.
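The per-objective flow (learn, then practice, then EarnPlus) can be pictured as a small state machine. This is a minimal sketch under stated assumptions: `Stage`, `ObjectiveProgress`, and the `points_required` threshold are hypothetical names, and GoPrep's actual point values and unlocking rules are not described here.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    LEARN = "learn"
    PRACTICE = "practice"
    EARN_PLUS = "earn_plus"
    DONE = "done"


@dataclass
class ObjectiveProgress:
    """Hypothetical tracker for one objective: learn -> practice -> EarnPlus -> done."""

    points_required: int  # assumed EarnPlus threshold, not a documented GoPrep value
    stage: Stage = Stage.LEARN
    points: int = 0

    def finish_learning(self) -> None:
        # Practice unlocks only after the learning step.
        if self.stage is Stage.LEARN:
            self.stage = Stage.PRACTICE

    def finish_practice(self) -> None:
        # EarnPlus unlocks only after practice.
        if self.stage is Stage.PRACTICE:
            self.stage = Stage.EARN_PLUS

    def record_earn_plus(self, points_earned: int) -> Stage:
        """Add EarnPlus points; the objective is done once the threshold is reached."""
        if self.stage is not Stage.EARN_PLUS:
            raise ValueError(f"EarnPlus is not open during stage {self.stage.value!r}")
        self.points += points_earned
        if self.points >= self.points_required:
            self.stage = Stage.DONE
        return self.stage
```

For example, with `points_required=100`, two EarnPlus attempts worth 60 and 50 points would complete the objective, while calling `finish_practice()` before `finish_learning()` leaves the student in the learning stage.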

Our AI helps you create the course blueprint from your materials and write the blocks, or extract them directly from the resources you provide.

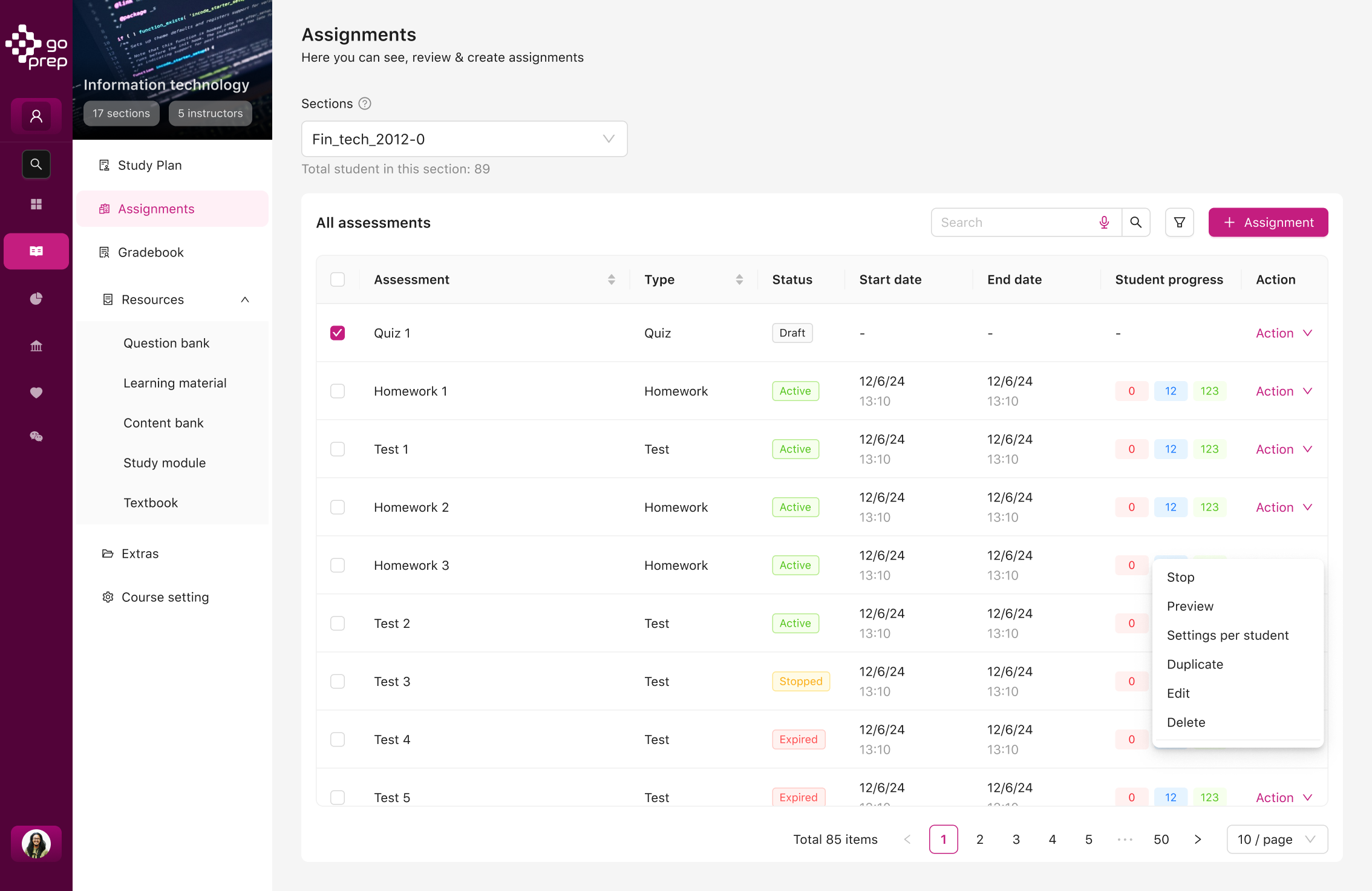

Assessments

Assessments build on that foundation and come in four formats: Quiz, Test, Exam, and the EarnPlus described above. Instructors can run them online with customizable policies. We also added question pooling, which groups items by concept and difficulty and can randomize selection to keep things fair.
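Question pooling can be sketched as grouping items by `(concept, difficulty)` and drawing a random sample from each pool an assessment needs. This is an illustrative sketch, not GoPrep's implementation: `Question`, `build_pools`, `draw_assessment`, and the "blueprint" mapping of pool to item count are hypothetical names.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

PoolKey = Tuple[str, str]  # (concept, difficulty)


@dataclass(frozen=True)
class Question:
    qid: str
    concept: str
    difficulty: str  # assumed labels such as "easy" / "hard"


def build_pools(questions: List[Question]) -> Dict[PoolKey, List[Question]]:
    """Group items into pools keyed by (concept, difficulty)."""
    pools: Dict[PoolKey, List[Question]] = defaultdict(list)
    for q in questions:
        pools[(q.concept, q.difficulty)].append(q)
    return pools


def draw_assessment(
    pools: Dict[PoolKey, List[Question]],
    blueprint: Dict[PoolKey, int],
    seed: Optional[int] = None,
) -> List[Question]:
    """Randomly draw `count` distinct items from each pool named in the blueprint.

    Seeding per student (e.g. with a numeric student ID) makes a student's draw
    reproducible while different students see different items.
    """
    rng = random.Random(seed)
    selected: List[Question] = []
    for key, count in blueprint.items():
        pool = pools.get(key, [])
        if len(pool) < count:
            raise ValueError(f"pool {key} has {len(pool)} items, needs {count}")
        selected.extend(rng.sample(pool, count))
    return selected
```

Because every student draws the same number of items per concept and difficulty, forms differ in content but stay comparable in scope, which is the fairness property pooling is meant to provide.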

We support 10 different question types for these assessments:

- True or False

- All That Apply

- Multiple Choice

- Ranking

- Matching

- Fill in the Blanks

- Long Answer (Essay)

- Numeric Questions (Supports Mathematical Notations and Single Numeric Answer)

- Tabular Questions (Using Excel like Spreadsheets)

- Hotspot (Finding answer in an image)

Our AI can design questions from learning materials, extract them directly from your resources (PDF or CSV), and generate similar questions from the Parent questions you add to the question bank, while keeping the same scope and level of difficulty.

Ebook

You can add an ebook to your course as a reference for students, so they can read it, highlight passages, and add notes.

Our AI lets instructors design the course and materials directly from this book and link learning materials to the relevant parts of it. It also lets students interact with the book through our chatbot, asking questions about it and even asking Zacky to add practice assessments for them.

Our AI supports most file formats you are likely to have, including PDF, EPUB, DOC/DOCX, Markdown, RTF/TXT, and HTML.

Analytics

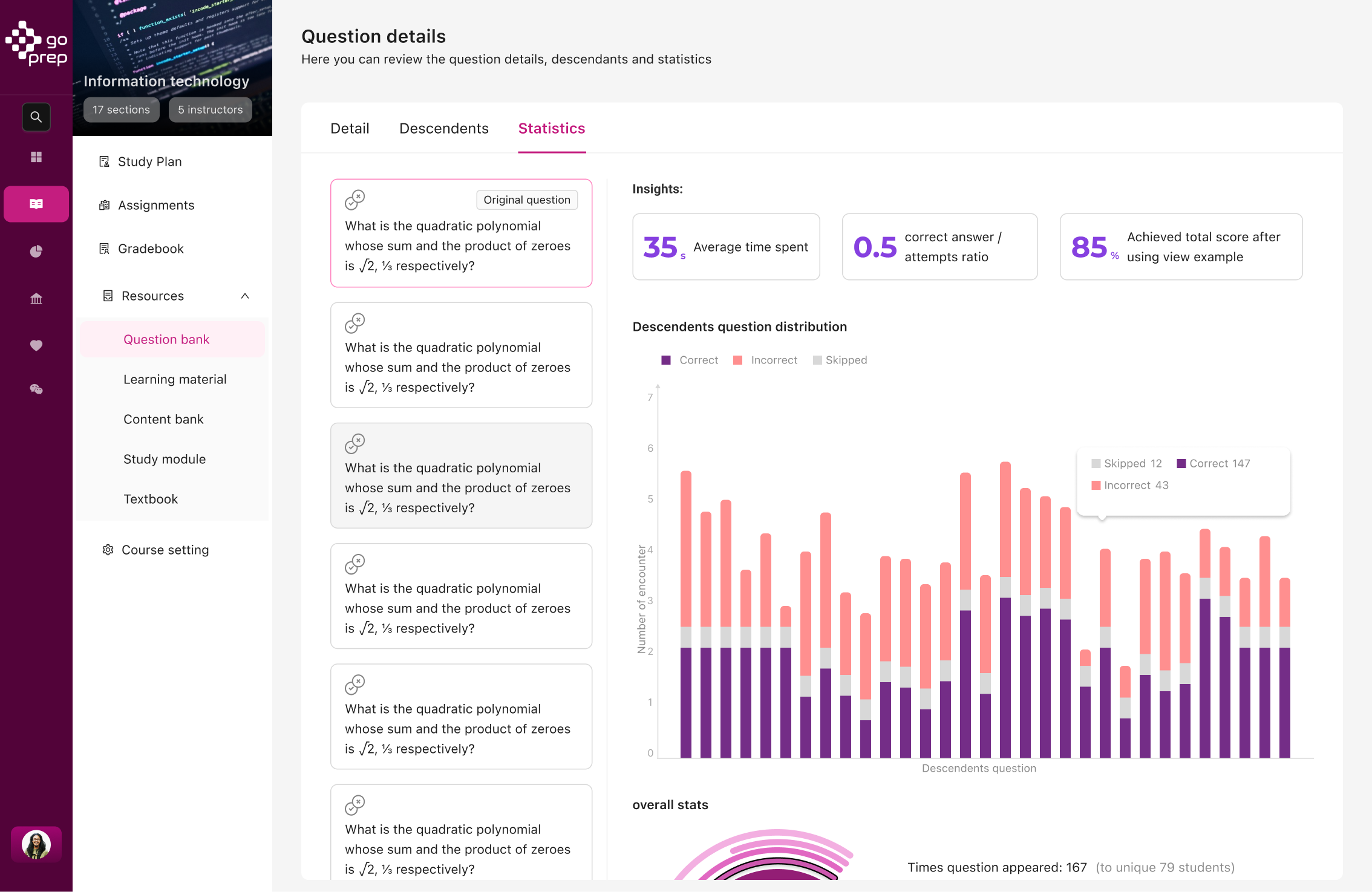

Analytics closes the loop by turning activity into decisions. Dashboards connect questions, assignments, and progress so you can see performance at a glance or drill into the details. We analyze Parent questions and their AI‑generated Descendants to understand concept‑level mastery, track participation and outcomes as assessments run, and surface trends in gradebooks and student result pages. This includes engagement time and learning‑objective performance. The goal is to make “what’s happening” and “what to do next” equally obvious for instructors and learners.
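The Parent/Descendant analysis can be sketched as rolling each attempt up to its question family (a Parent plus its AI-generated variants) or to its concept, then computing accuracy per bucket. This is a minimal illustration: `Item`, `family_of`, `accuracy_by`, and the `(qid, is_correct)` attempt format are assumed names, not GoPrep's schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Item:
    qid: str
    concept: str
    parent_id: Optional[str] = None  # None for a Parent; set for an AI-generated Descendant


def family_of(item: Item) -> str:
    """A Descendant rolls up to its Parent; a Parent is the root of its own family."""
    return item.parent_id or item.qid


def accuracy_by(
    items: List[Item],
    attempts: Iterable[Tuple[str, bool]],
    key: Callable[[Item], Hashable],
) -> Dict[Hashable, float]:
    """Share of correct attempts per bucket, where `key` maps an item to its bucket."""
    by_id = {it.qid: it for it in items}
    totals: Dict[Hashable, List[int]] = defaultdict(lambda: [0, 0])  # [correct, attempted]
    for qid, correct in attempts:
        bucket = totals[key(by_id[qid])]
        bucket[0] += int(correct)
        bucket[1] += 1
    return {b: c / n for b, (c, n) in totals.items()}
```

Passing `family_of` as the key gives accuracy per Parent family, while `key=lambda it: it.concept` gives concept-level mastery across all families that share a concept.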

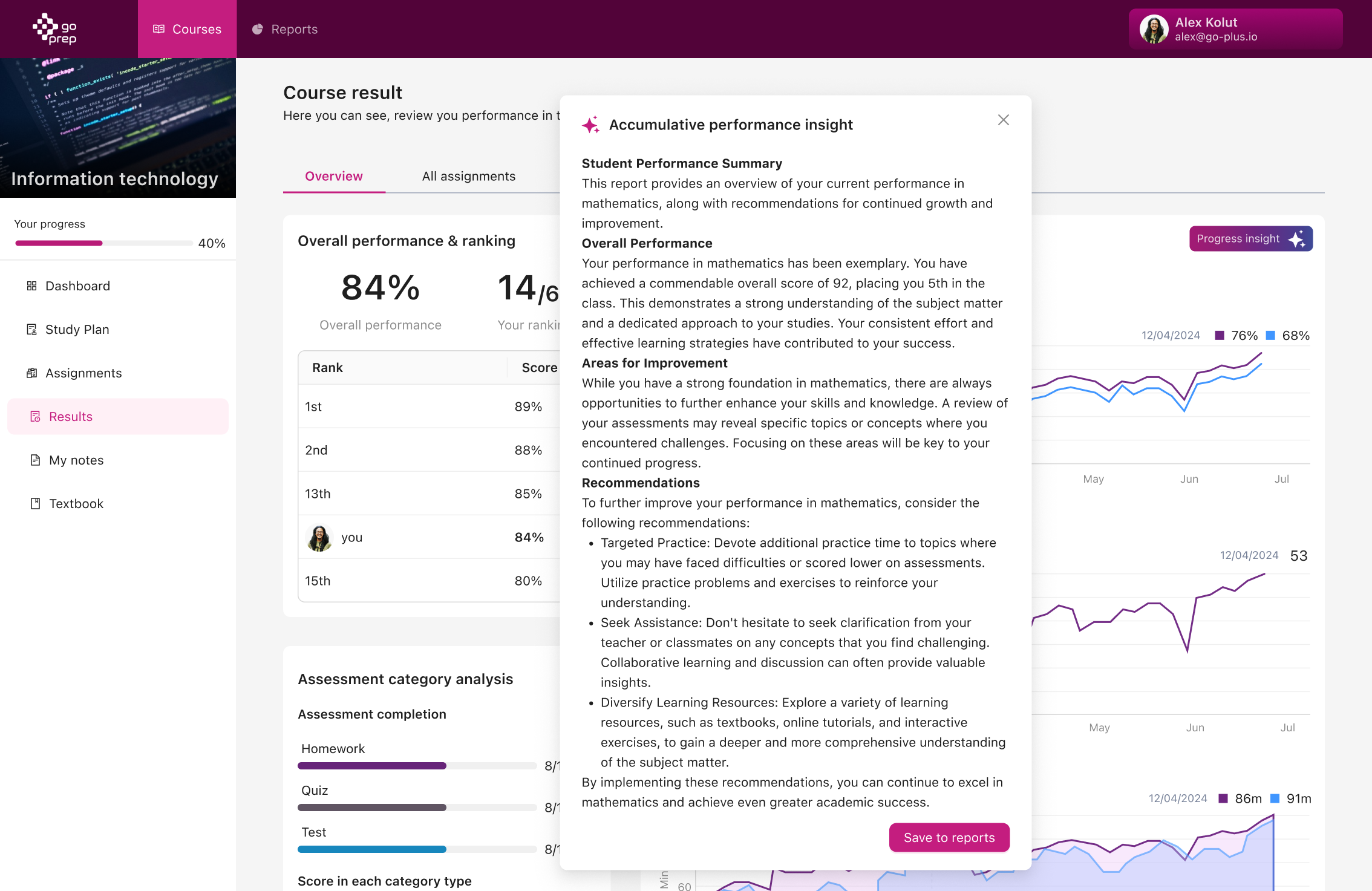

Our AI comes into play in two ways for analytics:

1- As a pop-up that explains the numbers and charts on screen, opened with a button on each chart.

2- As a chatbot assistant you can ask to dig deeper into the data, for example to show knowledge gaps, top-performing students, and much more.

What do we do with the Data behind the scenes?

Everything I have explained so far is the Generative AI part, which mostly handles customer-facing features. But my job doesn't end there; in many ways, that's where it begins. The goal is to create more effective learning experiences by analyzing patterns in student data and using those insights to implement targeted interventions and platform improvements. I ran data studies to inform “Zacky Insights” and the adaptive engine. Here is a brief summary of one of those studies, conducted after we completed a pilot with King Saud University (KSU), which addresses three critical questions:

- How our AI helped students and instructors.

- How repeated practice (“EarnPlus”) translates to test performance.

- How study timing relates to outcomes.

In this pilot, 700 students spent almost 39,000 hours on the platform on assignments alone (about 55 hours per student). This level of engagement was made possible by the large number of questions in the database, which we could not have built without our AI. The instructor wrote only about 200 questions, and our AI generated on average 10 similar questions for each, so roughly 2,000 of the roughly 2,200 questions were AI-generated. Assuming a similar authoring effort per question, automating this part saved more than 90% of the instructor's question-writing time.
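The arithmetic behind these figures can be written out as follows. The second line assumes that writing one question takes about the same effort whether it is a Parent or a variant, so the share of AI-generated items approximates the share of authoring time saved.

$$
\frac{39{,}000\ \text{h}}{700\ \text{students}} \approx 55.7\ \text{h per student}
$$

$$
\text{AI share} = \frac{200 \times 10}{200 + 200 \times 10} = \frac{2{,}000}{2{,}200} \approx 90.9\%
$$

Since the total is slightly under 39,000 hours, the per-student figure is slightly under 55.7 hours, consistent with "about 55".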

This metric is even better in our current project with another university, because instructors don't need to create questions manually at all: they upload a PDF, and our AI extracts the questions, adds them to the database, and generates similar questions automatically. And that's only our contribution to the instructor experience.

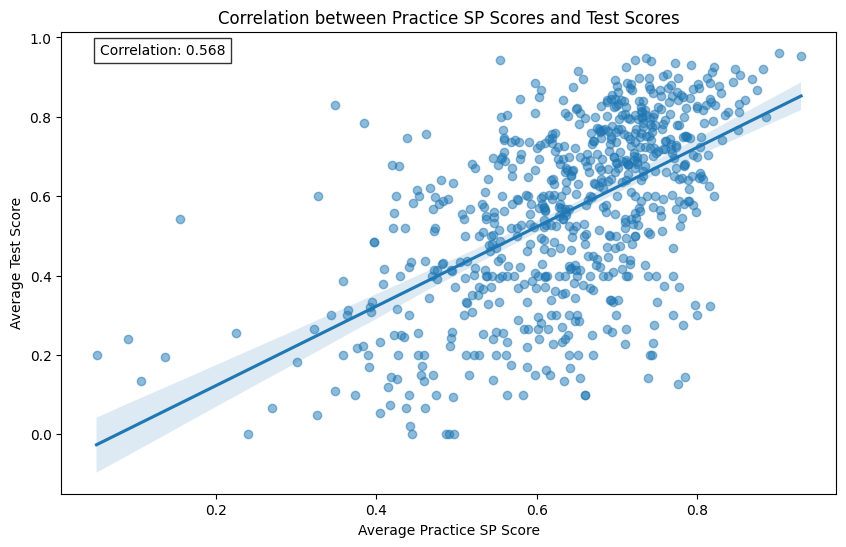

To see how students benefit from our AI in terms of learning quality, I analyzed how repeated practice (EarnPlus) relates to students’ accuracy and time management in their assignments, including final exams. I observed positive correlations with test scores: better scores on homework and Study Plan tasks are generally associated with higher test scores.
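A positive correlation like this is commonly measured with Pearson's r between each student's practice score and test score. The sketch below is a plain-Python illustration; the actual study may have used pandas or SciPy, and the function name `pearson_r` is an assumption. Note that r measures linear association only and says nothing on its own about whether practice causes higher scores.

```python
import math
from statistics import mean
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equal-length score lists (e.g. practice vs. test)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / (sx * sy)
```

On Python 3.10 and later, `statistics.correlation` computes the same coefficient; the explicit version is shown so each term is visible.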

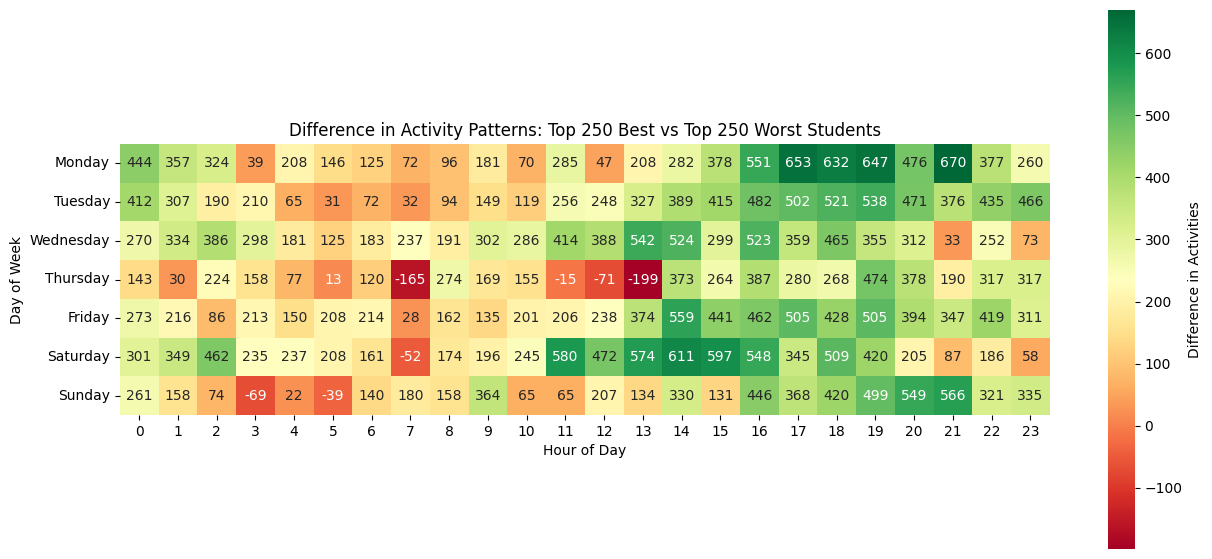

As the chart shows, the relationship is not perfectly linear, which suggests that other factors (such as time spent in other parts of the platform, like the learning materials and the ebook) may also significantly influence exam performance. So I started analyzing the relationship between students’ study behaviors and their academic outcomes (final exam scores). The goal was to highlight how much it matters when, how often, and for how long students engage in learning activities. Comparing the best and worst exam performers reveals clear differences in their study patterns:

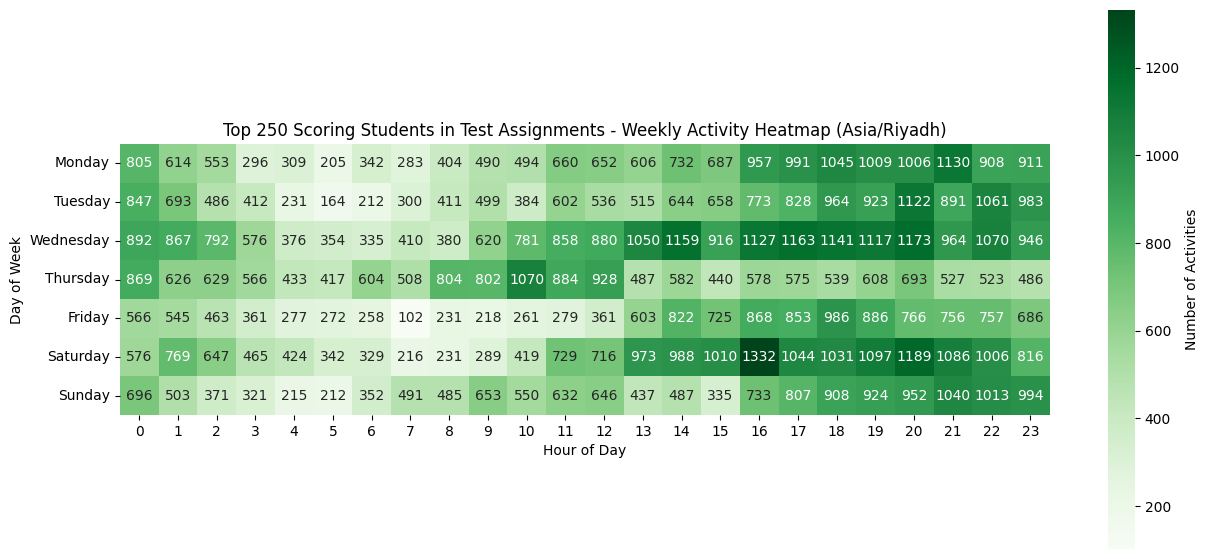

Here is how top students spent their time on the platform:

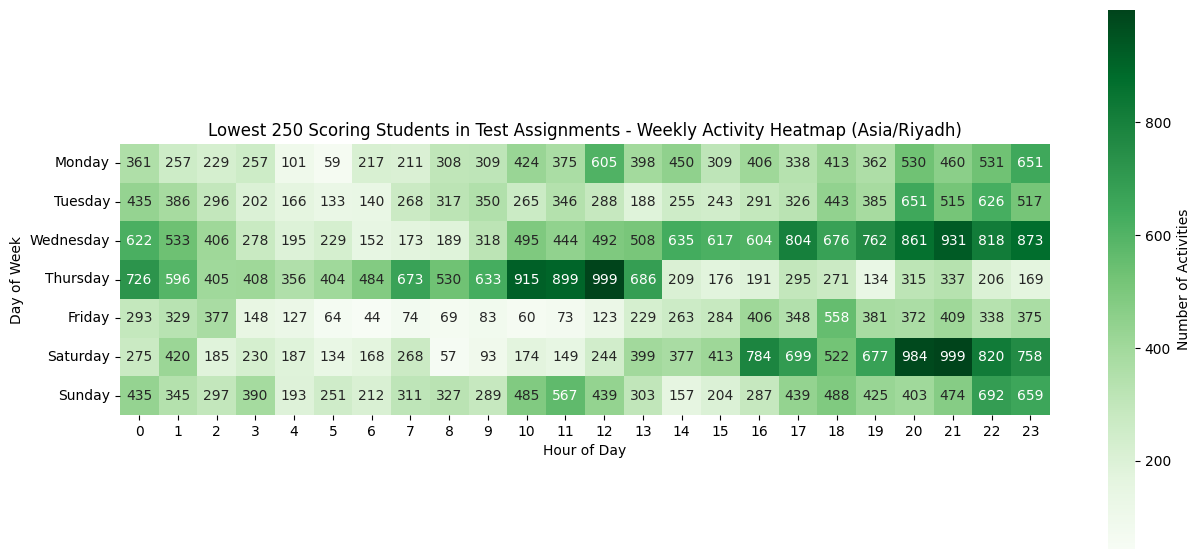

And this is how low performers spent theirs:

Putting it all together makes the picture much clearer:

Green boxes: times when the best‑performing students were active.

Red boxes: times when the worst‑performing students were active.

During afternoon hours (12 PM–6 PM), the best-performing students were more active, especially on weekdays, which may reflect higher productivity or focus. Conversely, late-night and early-morning windows (for example, 4–6 AM on Thursday) show more activity from the worst-performing students, which may point to fatigue, cramming, or last-minute attempts. (To keep the analysis accurate, I excluded Test and Quiz activities from the activity windows to avoid tautological patterns.)
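The green/red comparison can be sketched as two weekday-by-hour activity grids, one per cohort, with Test and Quiz events filtered out, then normalized so cohorts of different sizes are comparable. This is an assumed reconstruction of the approach: the event tuple format and the names `activity_grid`, `share`, and `contrast` are hypothetical, and timestamps are assumed to be already converted to the students' local time.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable, Set, Tuple

Slot = Tuple[int, int]  # (weekday, hour); weekday follows datetime.weekday(), Monday == 0
EXCLUDED_TYPES = {"test", "quiz"}  # left out to avoid tautological patterns


def activity_grid(events: Iterable[Tuple[str, str, datetime]], cohort: Set[str]) -> Counter:
    """Count a cohort's activity events per (weekday, hour) slot."""
    grid: Counter = Counter()
    for student_id, activity_type, ts in events:
        if student_id in cohort and activity_type.lower() not in EXCLUDED_TYPES:
            grid[(ts.weekday(), ts.hour)] += 1
    return grid


def share(grid: Counter) -> Dict[Slot, float]:
    """Fraction of the cohort's activity falling in each slot."""
    total = sum(grid.values())
    return {slot: n / total for slot, n in grid.items()} if total else {}


def contrast(top: Counter, bottom: Counter) -> Dict[Slot, float]:
    """Positive: slot favoured by top performers (green). Negative: by low performers (red)."""
    a, b = share(top), share(bottom)
    return {slot: a.get(slot, 0.0) - b.get(slot, 0.0) for slot in a.keys() | b.keys()}
```

Plotting the `contrast` values as a 7 × 24 heatmap gives a single chart in which the sign of each cell shows which cohort dominates that time slot.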

Why does this matter to us?

By examining patterns in study sessions alongside performance results, valuable insights can be uncovered to guide more effective learning strategies. The aim is to understand how timing, frequency, and duration of study contribute to success, and then translate these findings into practical tools such as personalized reminders and tailored learning plans. This involves collecting and analyzing data on student activities and applying statistical methods to reveal meaningful correlations and potential causal links, ultimately informing approaches that foster improved performance.

The Solution Architecture

When I joined the team, there had already been an attempt to enable AI in the platform, but it wasn't working properly. Generative AI (LLMs) was still a new trend, and there were no analytics, only data visualizations. So I took full responsibility for the AI side, and this is how, and why, I built it:

From Monolith to Microservice

GoPrep was a classic Django monolith: one repo, one runtime, one database. It was productive early on, but as soon as we pushed hard on AI features, the system started to feel like a physics problem. Question generation, document processing, and retrieval demanded so much computation that the whole stack grew heavy: CPU‑ and memory‑intensive jobs were competing with everyday traffic.

I proposed turning Zacky (our AI layer) into a separate service so we could scale it on its own axis, isolate failures, and choose technology that fit the problem instead of forcing AI workloads through a courseware frame.

The second pressure was velocity. Different teams were shipping fast, but their work landed in the same process space and deploy pipeline, so features stepped on each other. Having Zacky as its own service let us decouple development and release cadences. I could iterate on agents, prompts, and async pipelines without worrying that a long‑running extraction task would slow down a live exam or a nightly report.

The clean boundary also made contracts explicit: GoPrep calls Zacky over APIs, Zacky guarantees response shapes and SLAs.
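An explicit response contract can be sketched as a typed record plus checks that reject malformed payloads before GoPrep consumes them. The document says Zacky validates against Pydantic models; to keep this sketch dependency-free it uses standard-library dataclasses instead, and the field names (`stem`, `options`, `answer_index`, `parent_id`, `source_refs`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

REQUIRED = {"stem", "options", "answer_index", "parent_id"}
OPTIONAL = {"source_refs"}


@dataclass
class GeneratedQuestion:
    """Hypothetical response shape for a multiple-choice item returned by Zacky."""

    stem: str
    options: List[str]
    answer_index: int
    parent_id: str
    source_refs: List[str] = field(default_factory=list)  # pointers back to source passages

    def __post_init__(self) -> None:
        # Semantic checks that a plain type signature cannot express.
        if not self.stem.strip():
            raise ValueError("stem must not be empty")
        if len(self.options) < 2:
            raise ValueError("need at least two options")
        if not 0 <= self.answer_index < len(self.options):
            raise ValueError("answer_index is out of range")


def parse_response(payload: Dict[str, Any]) -> GeneratedQuestion:
    """Reject payloads with missing or unexpected keys, then build the typed record."""
    keys = set(payload)
    missing, unknown = REQUIRED - keys, keys - REQUIRED - OPTIONAL
    if missing or unknown:
        raise ValueError(f"missing={sorted(missing)} unknown={sorted(unknown)}")
    return GeneratedQuestion(**payload)
```

Rejecting unknown keys, not just missing ones, keeps the contract strict in both directions, so a change on the Zacky side surfaces as an error instead of silently leaking new fields into the courseware.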

Finally, we wanted a business path beyond the platform itself. By carving Zacky out, we could monetize it as a SaaS for other EdTech providers, like LMSs and courseware tools, that have content and users but no AI. That meant thinking like a platform from day one: multi‑tenancy, metering, per‑tenant isolation, and an integration surface that’s not tied to GoPrep’s internal models or UI assumptions.
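Per-tenant metering, at its simplest, is a counter of billable units keyed by tenant and feature, where billing for one tenant never reads another tenant's data. The sketch below is illustrative only: `UsageMeter`, the feature names, and pricing in integer cents are assumptions, and a production meter would persist these counts rather than keep them in memory.

```python
from collections import defaultdict
from typing import DefaultDict, Dict


class UsageMeter:
    """Hypothetical per-tenant meter counting billable units (e.g. generated questions)."""

    def __init__(self) -> None:
        self._usage: DefaultDict[str, DefaultDict[str, int]] = defaultdict(lambda: defaultdict(int))

    def record(self, tenant_id: str, feature: str, units: int) -> None:
        if units < 0:
            raise ValueError("units must be non-negative")
        self._usage[tenant_id][feature] += units

    def usage_for(self, tenant_id: str) -> Dict[str, int]:
        # .get avoids creating an empty entry for unknown tenants; only this tenant is read.
        return dict(self._usage.get(tenant_id, {}))

    def invoice(self, tenant_id: str, unit_price: Dict[str, int]) -> Dict[str, int]:
        """Charge per feature in the smallest currency unit (e.g. cents) to avoid float rounding."""
        return {f: n * unit_price[f] for f, n in self.usage_for(tenant_id).items()}
```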

Tech Stack

GoPrep is built on Django with PostgreSQL, a solid, batteries‑included web framework and a reliable relational store. For courseware flows (auth, enrollments, assignments, grading, schedules), Django’s conventions are productive and Postgres is great for transactional integrity and analytics‑friendly SQL. But for Zacky I needed a different shape.

I chose FastAPI because Zacky’s job is to expose clean, high‑performance endpoints (often streaming) rather than render templates or manage admin views. FastAPI’s async‑first model, type‑hinted contracts, and OpenAPI generation made it simple to stand up multiple access patterns with low overhead (REST and WebSocket natively, with gRPC served alongside), and to evolve the API quickly as the agent design matured.

On storage, we kept Postgres for the monolith and introduced MongoDB for Zacky. Early AI platforms live with changing schemas: new tool calls, evolving prompt configs, variant question shapes, and per‑tenant overrides. Mongo’s document model let me store these without schema thrash, handle unstructured artifacts (intermediate tool outputs, trace logs), and scale horizontally by service or tenant. It also made it easy to split resources, so heavy tenants can live on their own cluster while lighter tenants share. For retrieval, I paired this with a vector store (ChromaDB) so the system can embed documents and search semantically.

The result is a clean separation: Postgres for durable courseware records, and Mongo + Chroma for Zacky’s flexible, AI‑native data.

Operationally, Redis + Celery handle long‑running work: PDF extraction, text chunking, vector embedding, bulk question generation. Offloading these to workers prevents request threads from blocking and lets us scale workers elastically during ingestion spikes. For real‑time interactions (chat, streaming generation), FastAPI’s WebSocket endpoints keep latency low while the heavy lifting happens asynchronously in the background.
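Text chunking, one of the worker jobs listed above, is easy to sketch: split extracted text into overlapping word windows before embedding, so a sentence that straddles a boundary is still retrievable from at least one chunk. The window size and overlap below are assumed defaults, not Zacky's settings, and in the real pipeline a function like this would run inside a Celery worker rather than on the request thread.

```python
from typing import List


def chunk_text(text: str, max_words: int = 200, overlap: int = 40) -> List[str]:
    """Split text into overlapping windows of at most `max_words` words."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap  # each new window starts `step` words after the previous one
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return chunks
```

Word-based windows are the simplest choice; token-based or sentence-aware splitting would track the embedding model's input limit more closely.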

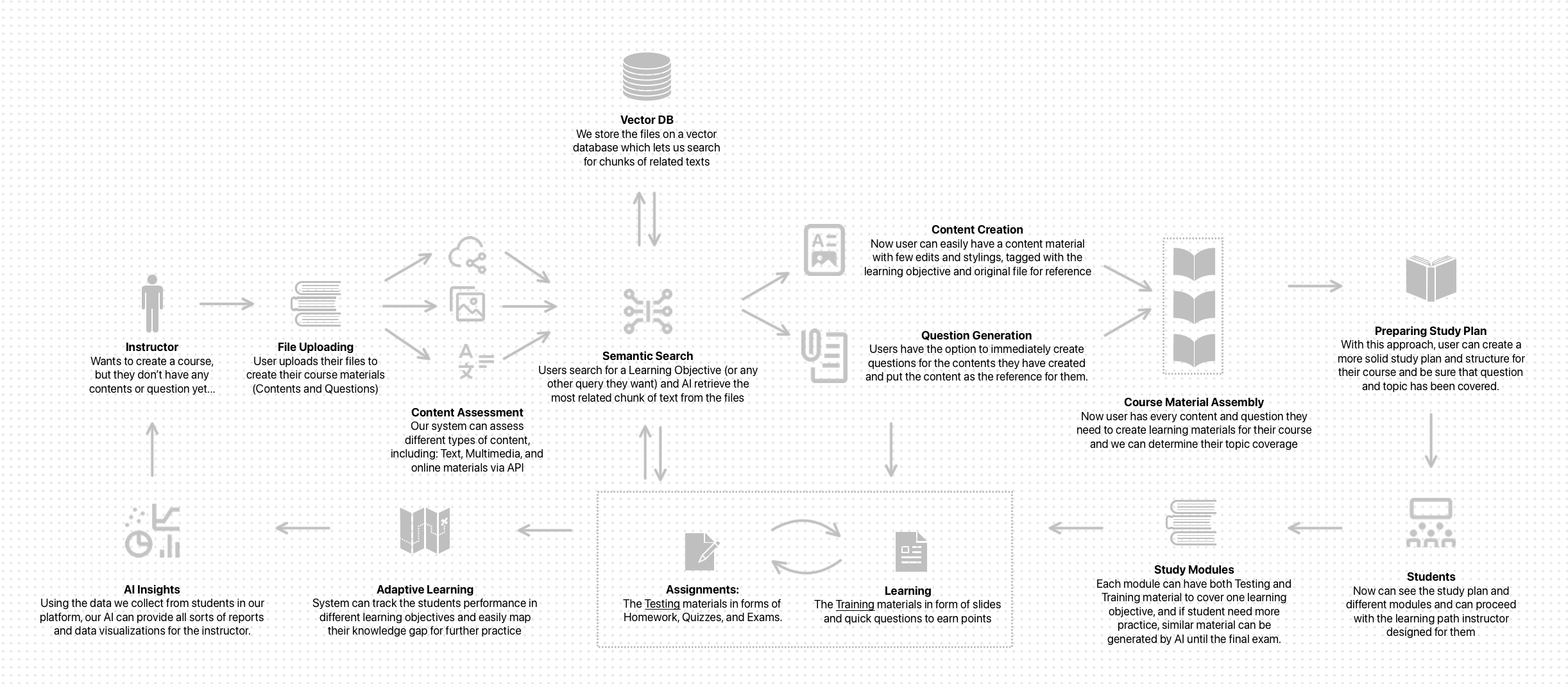

The Architecture (The high‑level system)

The instructor journey starts simple: someone wants to create a course but they don’t have content or questions yet. They upload PDFs or other files; we index them, store raw files, and pass the text through a content assessment step that can understand multiple formats. Those artifacts flow into a vector database so that later, when a user asks for a particular learning objective, the system performs semantic search to pull the most relevant passages.
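The index-then-query pattern behind this semantic search can be shown with a toy in-memory index. A vector store such as ChromaDB works with learned embeddings and a nearest-neighbour index; this sketch deliberately swaps in word-count vectors and brute-force cosine similarity so it runs with the standard library alone, and `embed`, `cosine`, and `TinyIndex` are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyIndex:
    """In-memory index illustrating the add-then-query shape a vector store provides."""

    def __init__(self) -> None:
        self._docs: List[Tuple[str, str, Counter]] = []  # (chunk_id, text, vector)

    def add(self, chunk_id: str, text: str) -> None:
        self._docs.append((chunk_id, text, embed(text)))

    def query(self, question: str, k: int = 3) -> List[Tuple[str, float]]:
        """Return the k chunk IDs most similar to the question, best first."""
        q = embed(question)
        scored = [(cid, cosine(q, vec)) for cid, _, vec in self._docs]
        return sorted(scored, key=lambda s: s[1], reverse=True)[:k]
```

Keeping chunk IDs in the results is what lets generated materials and questions link back to their source passages.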

From there, Zacky switches to creation mode. Using the retrieved snippets as grounding, it drafts learning materials, tagged by objective, and linked back to the source. In the same pass or as a follow‑up, Zacky generates assessment items aligned with the content, again referencing the source text for explainability. With content and questions in hand, the course material assembles into modules; instructors can review, tweak, and then roll the materials into a Study Plan. On the learner side, each module presents training materials and quick checks; if a student needs more practice, the system can spin up parallel variants until they reach mastery.

Once students begin interacting, telemetry feeds back into analytics: performance by objective, time on task, participation patterns, and question‑level outcomes including the lineage from a Parent question to its Descendants (AI‑generated variants). Those signals drive adaptive learning and instructor dashboards, and they also flow back into Zacky to refine generation and recommend the next best action.

The loop is intentional: ingest → generate → learn → measure → adapt.

The Agentic Core (how Zacky works)

Under the hood, Zacky runs an agent system that coordinates retrieval, generation, and chat. The Main Agent owns the conversation and decides which tools to invoke. A Query Agent specializes in retrieval, crafting search queries, calling the vector store, and returning grounded context. The Main Agent then chooses whether to draft content, generate questions, answer a student, or call back out for more context. Long‑running steps such as PDF extraction and embedding are queued to workers so the UI can stream progress and partial results.
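The division of labour between the two agents can be sketched as a Main Agent that owns the conversation and dispatches to tools, with a Query Agent injected for grounding. This is a structural sketch only: the class names mirror the description, but `choose_tool` uses a keyword rule as a stand-in for the LLM's decision, the tool names are hypothetical, and this version always retrieves first, whereas the described Main Agent decides when to call for more context.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Tool = Callable[[str, List[str]], str]  # (request, grounding passages) -> output


@dataclass
class QueryAgent:
    """Retrieval specialist: turns a request into a search and returns grounding passages."""

    search: Callable[[str], List[str]]  # e.g. a vector-store query, injected here

    def ground(self, request: str) -> List[str]:
        return self.search(request)


@dataclass
class MainAgent:
    """Owns the conversation and picks a tool; a keyword rule stands in for the LLM."""

    query_agent: QueryAgent
    tools: Dict[str, Tool]
    history: List[str] = field(default_factory=list)

    def choose_tool(self, request: str) -> str:
        text = request.lower()
        if "question" in text or "quiz" in text:
            return "generate_questions"
        if "lesson" in text or "material" in text:
            return "draft_content"
        return "answer"

    def handle(self, request: str) -> str:
        self.history.append(request)
        context = self.query_agent.ground(request)
        return self.tools[self.choose_tool(request)](request, context)
```

Injecting the search function and the tools keeps the agent testable without a model or a vector store, which mirrors how separating retrieval into its own agent isolates it from generation.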

A typical request flows through FastAPI’s routers into services, which either route it to the agent system (for generation and chat) or enqueue a task for the async layer. The agent consults the vector store via the Query Agent when it needs grounding, then produces structured outputs validated against Pydantic models, so GoPrep can consume the results without glue code. Throughout, logs and traces are written per tenant, and rate limits and auth tokens are enforced at the edge so Zacky can be offered safely as a service.
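Per-tenant rate limiting at the edge is often implemented as a token bucket. The document doesn't say which algorithm Zacky uses, so this is an assumed illustration: `TenantRateLimiter`, its `rate`/`capacity` parameters, and the injectable clock are hypothetical. In a FastAPI service, a check like `allow()` would typically run in a dependency or middleware before the request reaches the agent.

```python
import time
from typing import Callable, Dict, Tuple


class TenantRateLimiter:
    """Token bucket per tenant: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so tests can control time
        self._buckets: Dict[str, Tuple[float, float]] = {}  # tenant -> (tokens, last_seen)

    def allow(self, tenant_id: str, cost: float = 1.0) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(tenant_id, (self.capacity, now))
        # Refill for the time elapsed since this tenant's last request, capped at capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        allowed = tokens >= cost
        if allowed:
            tokens -= cost
        self._buckets[tenant_id] = (tokens, now)
        return allowed
```

Because each tenant has its own bucket, a heavy tenant exhausting its budget cannot slow down or block requests from other tenants.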

Results

Separating Zacky gave us crisp boundaries and room to grow. We could autoscale ingestion workers without touching exam traffic, throttle and meter per tenant, and ship AI features on their own release train. More importantly, it made the business modular: GoPrep remains a strong courseware product, and Zacky becomes a platform that any learning system can adopt. The result is a cleaner architecture for us and a clearer story for partners: use what you need, where you need it, without inheriting the rest of our stack.
